Waseem Ali, CEO of Rockborne

Amid great media fanfare, tech giants and key government figures came together earlier this month for the UK’s AI Safety Summit. The summit was the first of its kind and included key political figures such as US vice-president Kamala Harris and the UN Secretary-General António Guterres, as well as the likes of Google DeepMind CEO Demis Hassabis, OpenAI CEO Sam Altman, and of course, Elon Musk.

The risks arising from AI do not occur in a vacuum. In fact, they are inherently international in nature and are therefore best addressed through international cooperation. With this in mind, many hope that the summit will mark the first major step in kickstarting regular cross-country dialogue around the topic.

The UK government set out five key objectives for the summit:

- Develop a shared understanding of the risks posed by frontier AI and the need for action.

- Put forward a process for international collaboration on AI safety, including how best to support national and international frameworks.

- Propose appropriate measures which individual organisations should take.

- Identify areas for potential collaboration on AI safety research.

- Showcase how ensuring the safe development of AI will enable AI to be used for good globally.

What was agreed upon during the AI Safety Summit?

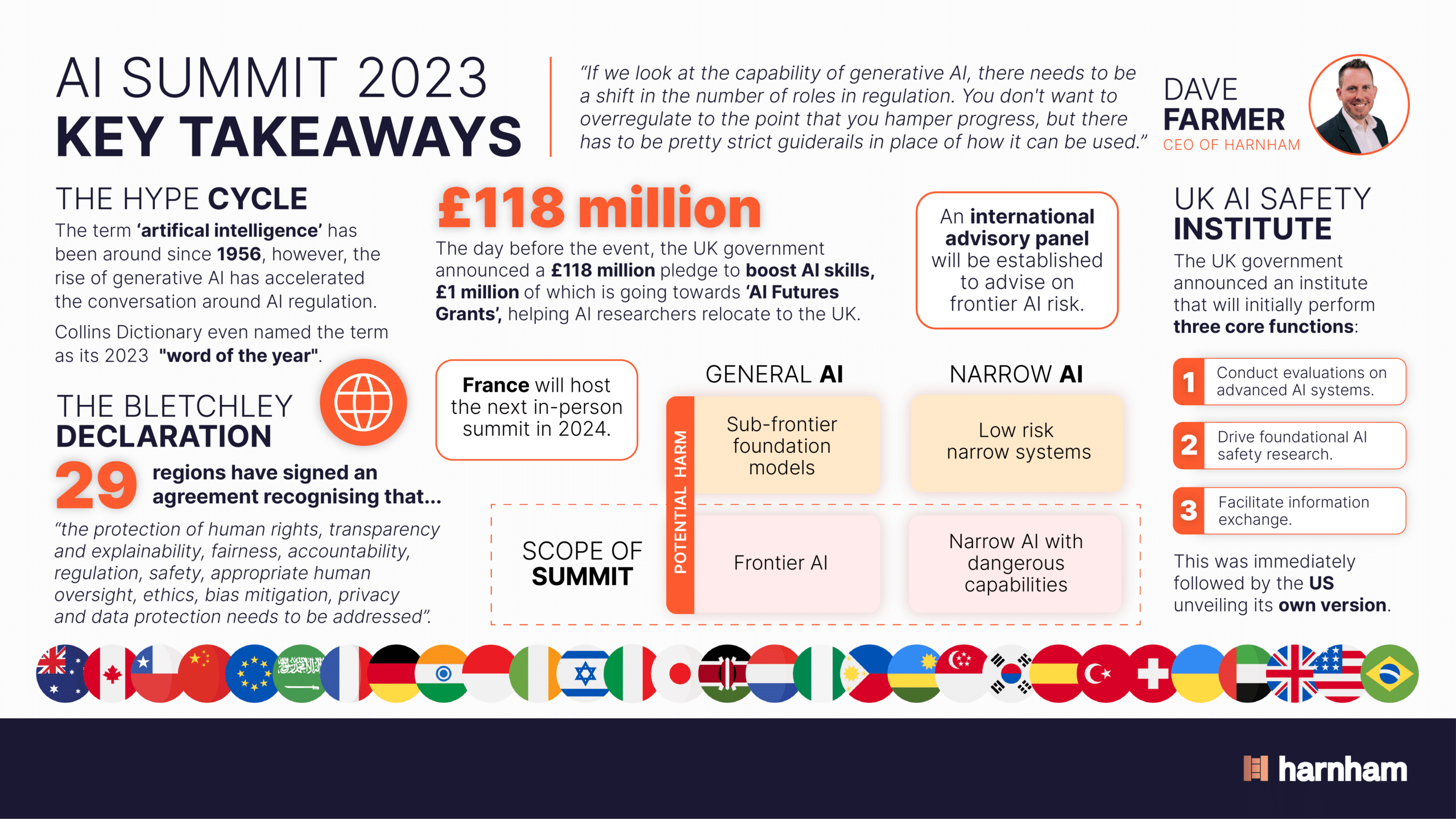

The most tangible outcome was the signing of the Bletchley Declaration by all 28 governments in attendance, as well as the European Union (EU). The declaration recognises that “the protection of human rights, transparency and explainability, fairness, accountability, regulation, safety, appropriate human oversight, ethics, bias mitigation, privacy and data protection needs to be addressed”.

The declaration outlined a shared approach to addressing the risks of “frontier” AI – defined as any highly capable general-purpose AI model that can perform a wide variety of tasks. It also noted that the AI Safety Summit presented “a unique moment to act and affirm the need for the safe development of AI and for the transformative opportunities of AI to be used for good and for all, in an inclusive manner in our countries and globally”.

The focus of this group of countries will therefore be to identify AI safety risks of shared concern, build a communal scientific and evidence-based understanding of these risks and sustain that understanding as capabilities continue to develop. This collaborative effort at the AI Safety Summit contributes to the broader goal of ensuring that AI development aligns with principles such as human rights, transparency, fairness, and accountability, as outlined in the Bletchley Declaration. The discussions and resolutions made at the AI Safety Summit serve as a cornerstone in fostering international cooperation and setting a collective agenda for addressing the challenges posed by advancing AI technologies.

Yes to regulation, but when?

The summit conveyed a general consensus that regulation is necessary and an acceptance that tech firms shouldn’t be left to mark their own homework. And already, countries are establishing their own national stance and timeline on this.

On the first day, the UK government announced that it was launching a UK AI Safety Institute, immediately followed by the US unveiling its own version. In Japan, the G7 group of industrialised countries issued a joint statement on the importance of regulating AI. Meanwhile, the EU seems poised to be the first to pass an official ‘AI Act’.

Whilst there may be a consensus on the direction of travel, the matter of timing will fall to the discretion of each individual country as it establishes its own pace.

Approaches may also differ depending on the priorities identified. It’s likely that areas identified as higher risk due to the sensitive nature of the data involved, such as the public sector, military and banking, will see a greater initial regulatory focus, rather than governments attempting to take a blanket approach to guidelines.

So why was the AI summit needed?

The technical side of AI has never presented a major challenge to growth. The tech business community has the right people, able to build the right tech at the right time, as has been shown with other technical innovations. As with those, the biggest cloud providers and tech companies will, of course, drive this one.

The real challenge for leaders is the governance and management of data – how they can safely use AI and protect customer and employee personal data. Over the coming months, we’re likely to see increasing numbers of businesses reassuring consumers of their security. Many will be following in the footsteps of figures such as Marc Benioff, CEO of Salesforce, who released a statement about the company’s commitment to consumer data protection and refusal to sell their customers’ data. Following the summit, we are likely to see a barrage of similar statements emerge in the space.

Managing risk

Whilst the term ‘artificial intelligence’ has been around since 1956, its more recent iterations – generative AI for example – have passed into the mainstream. And as it moves further into the public consciousness, it becomes increasingly crucial that regulation and safety dominate the conversation.

AI’s increasing accessibility to the general population, combined with its rich potential, creates the ideal conditions for a constant stream of new uses to be discovered. But with these new applications come new opportunities for exploitation. Regulation racing to catch up with innovation is not new – technology usually develops faster than guidelines can – but it’s the speed and promise of generative AI that make safeguarding and cyber risks particularly crucial to nail down now.

Take self-driving car technology, for example. Imagine that you are on a motorway in a self-driving car going 80 miles per hour and someone hacks into your car with dishonest intentions. Or picture an AI model being used to make business decisions in a regulated industry and someone hacks into the data system to change processes. Or consider the risks from a child safeguarding perspective, with increasing numbers of young people active online. There are so many elements at play that it’s critical that AI is handled in the right way.

With every new use for AI comes greater possibilities for cybercriminals to hack into increasingly valuable data, so there needs to be a discussion around how we innovate at pace, how we regulate that innovation, and how we protect that innovation from a cyber safeguarding perspective.

Regulation vs Innovation

The ever-present challenge of new innovation is striking a balance between regulation and tech evolution. While clamping restrictions and legislation around a developing bubble of tech will stifle its growth, without some safety measures, you are essentially opening the door to risk.

It’s not about stamping out enthusiasm. Instead we must find ways to harness the fervour while also building data governance and safety policies into the very structure of the movement, rather than it being an afterthought.

The problem is compounded by the fact that AI has huge potential for sectors such as healthcare, and with my background in healthcare, it’s hard to ignore the possibility of regulation impeding potentially lifesaving development. Say an AI model is created that helps with the early detection of cancer or another life-threatening disease. What should the balance between regulation and ethics look like?

Personally, if I were leading the country on AI, I would start my AI approach by exploring how we can safely use data to help healthcare, particularly given the fact that the NHS waitlist is predicted to reach 8 million by the summer of 2024. AI has the potential to be proactive and revolutionary in this field: saving lives, extending life expectancies, and allowing people to live a more comfortable existence.

The Great Ethics debate

In the aftermath of the summit, the ethics debate continues to rage on, fuelled by statements such as Musk’s inflammatory comment that ‘AI presents the biggest risk to humanity.’ Personally, I believe this presents an opportunity for all those involved to prove this theory wrong. If AI really does present the biggest risk, people won’t just sit by and watch the world burn; they’ll find a way to stop it. Industries just need to find ways to adapt, re-skill and work alongside new tech rather than at odds with it.

Setting out a road map

Big tech players will be the drivers of innovation and they're going to do it fast. They need to work hand in glove with local government to make sure that it is actually deployable and that organisations are benefiting from it.

The White House recently released a paper exploring protection within AI, for areas such as safeguarding in the military. I believe it would be beneficial to curate something similar in the UK, outlining the areas that need the greatest focus and what protections should be implemented first, such as reducing cybercrime in the public sector.
